Introduction

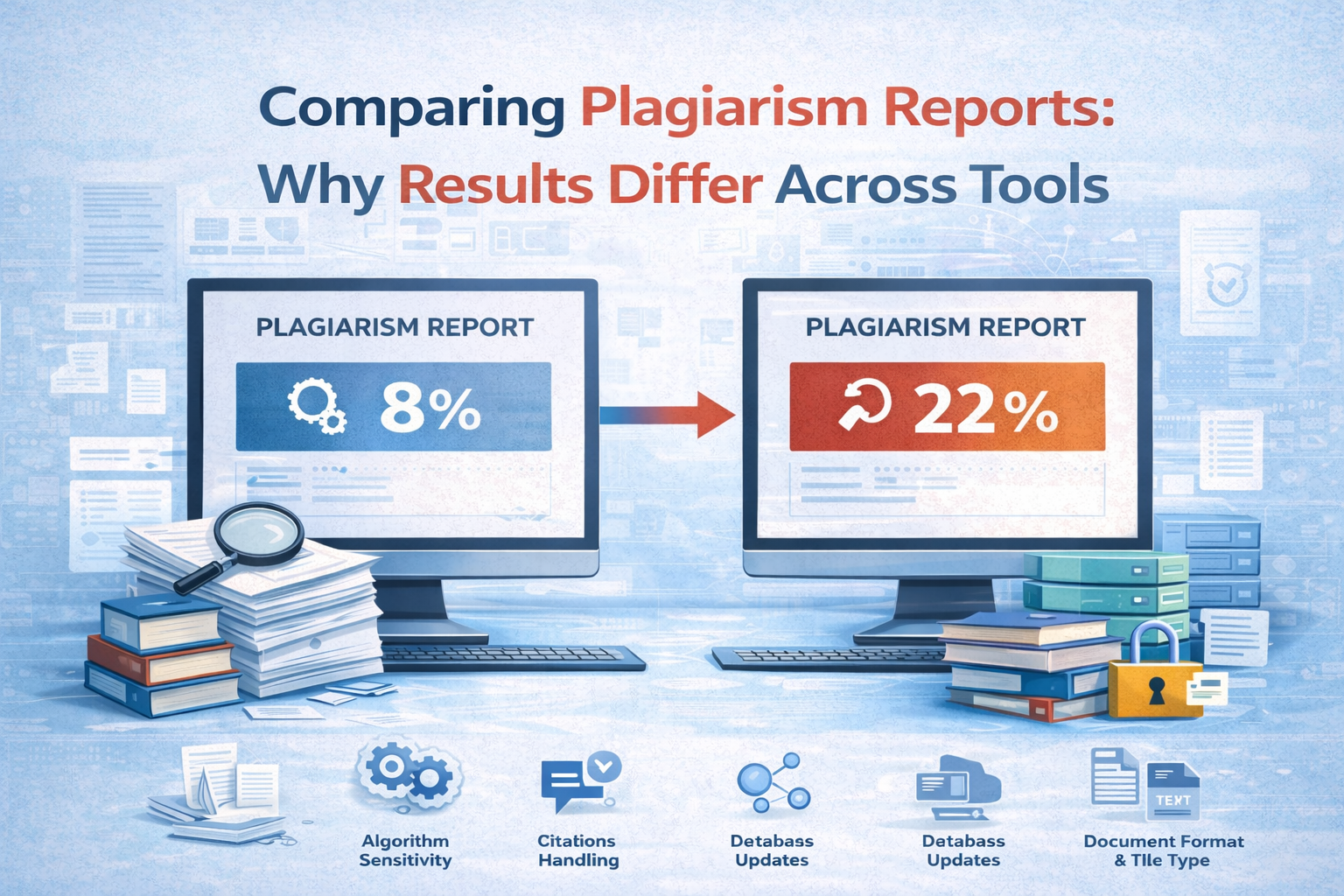

For PhD scholars in India, especially those enrolled in private universities, a plagiarism report is often the final checkpoint before thesis submission. However, many students face a puzzling situation: they run their document through two different plagiarism detection tools and get two very different results. One tool may show 8% similarity, while another shows 22% for the same content. This raises an important question: why do plagiarism reports vary so much? Understanding why these differences arise matters, because the score you submit can affect your academic evaluation, your compliance with university norms, and even whether your work is accepted.

Different Databases and Sources

One of the biggest reasons plagiarism reports differ is the database each tool uses. Turnitin, for example, has access to a vast repository of academic papers, journals, books, and student submissions from around the world. Urkund, on the other hand, focuses heavily on academic publications and institutional databases. Free tools often rely on web content indexed by search engines. This means that if a source is not part of a tool’s database, the similarity may go undetected, resulting in a lower percentage.
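To see why the database matters, here is a toy sketch in Python. It is not how Turnitin, Urkund or any other commercial tool works, since their matching methods are proprietary. It scores a passage by the share of its three-word sequences that appear anywhere in a reference collection. The function names `words`, `shingles` and `similarity_percent`, the three-word window, and the sample texts are all hypothetical choices made for illustration.

```python
import re

def words(text: str) -> list[str]:
    # Lowercase and keep only runs of letters/digits, so punctuation does not affect matching
    return re.findall(r"\w+", text.lower())

def shingles(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    # Every run of n consecutive words in the token list
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def similarity_percent(document: str, database: list[str], n: int = 3) -> float:
    # Share of the document's n-word sequences that occur in any source in the database
    doc_shingles = shingles(words(document), n)
    if not doc_shingles:
        return 0.0
    known: set[tuple[str, ...]] = set()
    for source in database:
        known |= shingles(words(source), n)
    return 100.0 * len(doc_shingles & known) / len(doc_shingles)

# Hypothetical sample texts
thesis = "Climate change affects crop yields in semi-arid regions of India"
web_only = ["Rainfall patterns in India shift every decade"]
with_journals = web_only + ["Studies show climate change affects crop yields in many regions"]

print(similarity_percent(thesis, web_only))       # 0.0: no matching source is indexed
print(similarity_percent(thesis, with_journals))  # about 44.4: the journal source is now found
```

The thesis and the scoring rule are identical in both calls; only the database changes, yet the score moves from 0% to about 44%.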

Variation in Algorithm Sensitivity

Every plagiarism detection tool uses its own algorithm to compare text. Some tools match only exact words and phrases, while others also detect restructured sentences or paraphrased content. For example, Grammarly’s plagiarism checker is good at spotting direct matches but may be less effective at identifying well-paraphrased text. Turnitin is more advanced in this respect, detecting similarities even when the sentence structure has been changed. These differences in sensitivity are a common cause of diverging similarity scores.
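The effect of algorithm sensitivity can be illustrated with two deliberately simple scoring rules. This is a toy comparison, not a description of how Grammarly or Turnitin work internally. `exact_overlap` only credits three-word sequences copied verbatim, while `word_set_overlap` (a Jaccard overlap of the two vocabularies) ignores word order and therefore still notices a restructured sentence. Both function names and the sample sentences are hypothetical.

```python
import re

def words(text: str) -> list[str]:
    # Lowercase word tokens with punctuation dropped
    return re.findall(r"\w+", text.lower())

def exact_overlap(candidate: str, source: str, n: int = 3) -> float:
    # Percentage of the candidate's n-word sequences that appear verbatim in the source
    c, s = words(candidate), words(source)
    c_seqs = {tuple(c[i:i + n]) for i in range(len(c) - n + 1)}
    s_seqs = {tuple(s[i:i + n]) for i in range(len(s) - n + 1)}
    return 100.0 * len(c_seqs & s_seqs) / len(c_seqs) if c_seqs else 0.0

def word_set_overlap(candidate: str, source: str) -> float:
    # Jaccard similarity of the two vocabularies: order-insensitive, so restructuring does not hide overlap
    c, s = set(words(candidate)), set(words(source))
    union = c | s
    return 100.0 * len(c & s) / len(union) if union else 0.0

# Hypothetical sample sentences
source = "Rising temperatures reduce wheat yields significantly."
restructured = "Wheat yields are significantly reduced by rising temperatures."

print(exact_overlap(restructured, source))     # 0.0: no three-word run survives the rewrite
print(word_set_overlap(restructured, source))  # about 55.6: most of the vocabulary is shared
```

Neither rule is a real paraphrase detector. The point is only that a tool built around verbatim sequences and one that tolerates reordering can disagree sharply on the same pair of sentences.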

Inclusion or Exclusion of Quotations and References

Universities often ask for plagiarism reports that exclude properly cited quotations and the bibliography. However, not all tools handle this equally well. Some free tools include cited quotations and references in their similarity count, which can inflate the percentage. Turnitin and Urkund usually offer settings to exclude these sections, which, when configured correctly, produce lower and more accurate similarity scores.
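Excluding quotations and the bibliography is essentially a preprocessing step applied before any matching takes place. Below is a minimal sketch that assumes, hypothetically, that quotations are enclosed in double quotation marks and that the bibliography starts at a line reading exactly "References". Real tools use their own, more robust detection rules, and `strip_quotes_and_references` is an illustrative name only.

```python
import re

def strip_quotes_and_references(text: str, heading: str = "References") -> str:
    # Assumption: the bibliography begins at a line that consists only of the heading
    lines = text.splitlines()
    for i, line in enumerate(lines):
        if line.strip().lower() == heading.lower():
            lines = lines[:i]  # drop the heading and everything after it
            break
    body = "\n".join(lines)
    # Assumption: quotations sit inside straight ("...") or curly (“...”) double quotes
    return re.sub(r'"[^"]*"|“[^”]*”', " ", body)

# Hypothetical sample thesis excerpt
thesis = (
    'As one study noted, "plagiarism is using words without credit".\n'
    "Our study extends this idea to doctoral theses.\n"
    "References\n"
    "Author, A. (2010). Sample cited work."
)
print(strip_quotes_and_references(thesis))
```

Only the text that survives this step would then be compared against the database, which is why a tool that skips it can report a higher percentage for the same thesis.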

Updates in Tool Databases

Plagiarism detection databases are updated regularly, but the timing differs between platforms. If you check your thesis with one tool today and another tool a week later, the second tool may have indexed more recent sources. This can change the similarity percentage, depending on whether any of the newly indexed sources match your text.

Document Formatting and File Types

Surprisingly, even the format of your document can impact the results. Some tools may have difficulty reading PDFs with complex formatting, tables, or images. This could lead to sections being skipped during the scan, lowering the similarity percentage. Uploading a clean, text-based version of your thesis usually produces more consistent results.

Differences in Report Interpretation

A plagiarism percentage is only one part of the report; how it is interpreted matters too. Two tools may both detect the same matches, but one might classify them as minor overlaps, while the other counts them fully toward the total percentage. This is why it’s essential to go beyond the number and read the detailed matches to understand whether the flagged content is genuine plagiarism or acceptable academic overlap.

Institutional Preferences and Settings

Private universities in India often apply their own settings when configuring plagiarism tools. For example, one university’s Turnitin license might be set to exclude matches under 8 words, while another institution may count matches as short as 3 words. These configurations can change the similarity percentage significantly, even when both institutions use the same tool.
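The minimum-match setting can be sketched the same way. In this toy version, which is not Turnitin's actual implementation, a word counts toward the similarity score only if it lies inside a run of at least `min_words` consecutive words that also appears in the source. The function `similarity_with_min_match` and the sample sentences are hypothetical.

```python
import re

def words(text: str) -> list[str]:
    # Lowercase word tokens with punctuation dropped
    return re.findall(r"\w+", text.lower())

def similarity_with_min_match(document: str, source: str, min_words: int) -> float:
    doc, src = words(document), words(source)
    if not doc:
        return 0.0
    # Every run of min_words consecutive words in the source
    src_runs = {tuple(src[i:i + min_words]) for i in range(len(src) - min_words + 1)}
    covered = [False] * len(doc)
    for i in range(len(doc) - min_words + 1):
        if tuple(doc[i:i + min_words]) in src_runs:
            # Mark every word of this matching run; overlapping runs merge into longer matches
            for j in range(i, i + min_words):
                covered[j] = True
    # Percentage of document words that fall inside a sufficiently long match
    return 100.0 * sum(covered) / len(doc)

# Hypothetical sample texts
source = ("the results of the study indicate that groundwater levels "
          "declined sharply across the district after 2005")
document = ("the results of the survey were mixed but groundwater levels "
            "declined sharply across the district after 2005")

print(similarity_with_min_match(document, source, 3))  # about 76.5: "the results of the" also counts
print(similarity_with_min_match(document, source, 8))  # about 52.9: only the nine-word copied run counts
```

The document and source are unchanged between the two calls; raising the threshold from 3 to 8 words alone drops the score by more than 20 percentage points.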

Why Scholars Should Check with More Than One Tool

Given all these variations, relying on a single tool may not give the full picture. Many experienced researchers run their thesis through at least two different plagiarism checkers before submission. This helps identify content that one tool may have missed and makes it easier to confirm compliance with institutional guidelines. However, no tool is perfect, and human judgment is always needed to interpret the results accurately.

Conclusion

Different plagiarism tools produce different results because of variations in databases, algorithms, sensitivity levels, and settings. For PhD scholars in private universities, understanding these differences can prevent unnecessary panic over high similarity percentages or false confidence from low scores. Always review the detailed matches, not just the overall percentage, and seek guidance from your supervisor if the results are unclear. Ultimately, the goal is to maintain academic integrity while ensuring that your work meets your institution’s standards.